TernFS — an exabyte scale, multi-region distributed filesystem

September 2025

XTX is an algorithmic trading firm: it builds statistical models that produce price forecasts for over 50,000 financial instruments worldwide. We use those forecasts to make trades. As XTX's research efforts to build better models ramped up, the demand for resources kept increasing.

The firm started out with a couple of desktops and an NFS server, and 10 years later ended up with tens of thousands of high-end GPUs, hundreds of thousands of CPUs, and hundreds of petabytes of storage.

As compute grew, storage struggled to keep up. We rapidly outgrew NFS first and existing open-source and commercial filesystems later. After evaluating a variety of third-party solutions, we made the decision to implement our own filesystem, which we called TernFS[1].

We have decided to open source our efforts: TernFS is available as free software on our public GitHub. This post motivates TernFS, explains its high-level architecture, and then explores some key implementation details. If you just want to spin up a local TernFS cluster, head to the README.

Another filesystem?

There's a reason why every major tech company has developed its own distributed filesystem — they're crucial to running large-scale compute efforts, and liable to cause intense disruption if they malfunction. [2]

XTX was in the same position, so we designed TernFS to be a one-stop solution for most of our storage needs, going from relatively 'cold' storage of raw market data to short-lived random-access data used to communicate between GPU jobs running on our cluster.

TernFS:

- Is designed to scale up to tens of exabytes, trillions of files, and millions of concurrent clients.

- Stores file contents redundantly to protect against drive failures.

- Has no single point of failure in its metadata services.

- Supports file snapshots to protect against accidental file deletion.

- Can span across multiple regions.

- Is hardware agnostic and uses TCP/IP to communicate.

- Utilizes different types of storage (such as flash vs. hard disks) cost effectively.

- Exposes read/write access through its own API over TCP and UDP, and a Linux kernel filesystem module.

- Requires no external service and has a minimal set of build dependencies. [3]

Specifically, C++ and Go are needed to build the various TernFS components.

The C++ and Go processes depend on a handful of vendored libraries, most notably RocksDB for C++.

Naturally, there are some limitations, the main ones being:

- Files are immutable — once they're written they can't be modified.

- TernFS should not be used for tiny files — our median file size is 2MB.

- The throughput of directory creation and removal is significantly constrained compared to other operations.

- TernFS is permissionless, deferring that responsibility to other services.

We started designing TernFS in early 2022 and began putting it into production in summer 2023. By mid-2024 all of our machine learning efforts were driven out of TernFS, and we're migrating the rest of the firm's storage needs onto it as well.

As of September 2025, our TernFS deployment stores more than 500PB across 30,000 disks, 10,000 flash drives, and three data centres. At peak we serve multiple terabytes per second. To this day, we haven't lost a single byte.

High-level overview

Now that the stage is set, we're ready to explain the various components that make up TernFS. TernFS' core API is implemented by four services:

- Metadata shards store the directory structure and file metadata.

- The cross-directory coordinator (or CDC) executes cross-shard transactions.

- Block services store file contents.

- The registry stores information about all the other services and monitors them.

    A ──► B means "A sends requests to B"

    ┌────────────────┐
    │ Metadata Shard ◄─────────┐
    └─┬────▲─────────┘         │
      │    │                   │
      │    │                   │
      │ ┌──┴──┐                │
      │ │ CDC ◄──────────┐     │
      │ └──┬──┘          │     │
      │    │             │ ┌───┴────┐
      │    │             └─┤        │
    ┌─▼────▼────┐          │ Client │
    │ Registry  ◄──────────┤        │
    └──────▲────┘          └─┬──────┘
           │                 │
           │                 │
    ┌──────┴────────┐        │
    │ Block Service ◄────────┘
    └───────────────┘

In the next few sections, we'll describe the high-level design of each service and then give more background on other relevant implementation details.[4]

Note that TernFS' multi-region capabilities are orthogonal to much of its high-level design, and they're therefore explained separately.

Metadata

To talk about metadata, we first need to explain what metadata is in TernFS. The short answer is: 'everything that is not file contents.' The slightly longer answer is:

- Directory entries, including all file and directory names.

- File metadata including creation/modification/access time, logical file size, and so on.

- The mapping between files and the blocks containing their contents.

- Other ancillary data structures to facilitate maintenance operations.

TernFS' metadata is split into 256 logical shards. Shards never communicate with each other. This is a general principle in TernFS: each service is disaggregated from the others, deferring to the clients to communicate with each service directly.[5]

There are some exceptions — most notably the shards execute requests from the CDC, and all services check into the registry.

A logical shard is further split into five physical instances, one leader and four followers, in a typical distributed consensus setup. The distributed consensus engine is provided by a purpose-built Raft-like implementation, which we call LogsDB, while RocksDB is used to implement read/write capabilities within a physical shard instance.

Currently all reads and writes go through the leader, but it would be trivial to allow clients to read from followers, and with a bit more effort to switch to a write-write setup.

       ┌─────────┐ ┌─────────┐       ┌───────────┐
       │ Shard 0 │ │ Shard 1 │  ...  │ Shard 255 │
       └─────────┘ │         │       └───────────┘
               ┌───┘         └───────────────────┐
               │                                 │
               │                  ┌────────────┐ │
               │ ┌───────────┐    │ Replica 0  │ │
               │ │           ◄────► (follower) │ │
    ┌────────┐ │ │ Replica 3 ◄──┐ └────────────┘ │
    │ Client ├─┼─► (leader)  ◄─┐│ ┌────────────┐ │
    └────────┘ │ │           ◄┐│└─► Replica 1  │ │
               │ └───────────┘││  │ (follower) │ │
               │              ││  └────────────┘ │
               │              ││  ┌────────────┐ │
               │              │└──► Replica 2  │ │
               │              │   │ (follower) │ │
               │              │   └────────────┘ │
               │              │   ┌────────────┐ │
               │              └───► Replica 4  │ │
               │                  │ (follower) │ │
               │                  └────────────┘ │
               └─────────────────────────────────┘

Each of the 256 shards is composed of five replicas, of which one is elected leader.

The clients only communicate with the leader, and the leader commits changes coordinating with the followers.

Splitting the metadata into 256 shards from the get-go simplifies the design, given that horizontal scaling of metadata requires no rebalancing, just the addition of more metadata servers.

For instance, our current deployment can serve hundreds of petabytes and more than 100,000 compute nodes with just 10 metadata servers per data centre, with each server housing roughly 25 shard leaders and 100 shard followers.

Given that the metadata servers are totally decoupled from one another, this means that we can scale metadata performance by 25× trivially, and by 100× if we were to start offloading metadata requests to followers.

TernFS shards metadata by assigning each directory to a single shard. This is done in a simple round-robin fashion by the cross-directory coordinator. Once a directory is created, all its directory entries and the files in it are housed in the same shard.

This design decision has downsides: TernFS assumes that the load will be spread across the 256 logical shards naturally. This is not a problem in large deployments, given that they will contain many directories, but it is something to keep in mind.[6]
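As a rough illustration of the placement rule above, here is a minimal Python sketch (not TernFS code). The class and method names are hypothetical, and the real CDC persists its state rather than keeping it in memory. It only shows the idea that directories are handed to shards in turn and that a file follows its parent directory.

```python
from dataclasses import dataclass, field

NUM_SHARDS = 256  # fixed number of logical shards, per the text


@dataclass
class ShardAssigner:
    """Toy stand-in for round-robin directory placement (hypothetical)."""
    next_shard: int = 0
    dir_to_shard: dict = field(default_factory=dict)

    def create_directory(self, path: str) -> int:
        # Each new directory goes to the next shard in turn.
        shard = self.next_shard
        self.dir_to_shard[path] = shard
        self.next_shard = (self.next_shard + 1) % NUM_SHARDS
        return shard

    def shard_for_file(self, path: str) -> int:
        # A file's metadata lives in the shard that owns its parent directory.
        parent = path.rsplit("/", 1)[0] or "/"
        return self.dir_to_shard[parent]


assigner = ShardAssigner()
assigner.create_directory("/")        # lands on shard 0
assigner.create_directory("/data")    # lands on shard 1
assigner.create_directory("/models")  # lands on shard 2
```

With only three directories, all load falls on three shards, which is the skew the paragraph above warns about for small deployments.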

Cross-directory transactions

Most of the metadata activity is contained within a single shard:

- File creation, same-directory renames, and deletion.

- Listing directory contents.

- Getting attributes of files or directories.

However, some operations do require coordination between shards, namely directory creation, directory removal, and moving directory entries across different directories.

The cross-directory coordinator (CDC) performs these distributed transactions using a privileged metadata shard API. The CDC transactions are stateful, and therefore the CDC uses RocksDB and LogsDB much like the metadata shards themselves to persist its state safely.

    ┌────────┐    ┌──────────┐ ┌───────────┐
    │ Client ├─┐  │ Shard 32 │ │ Shard 103 │
    └────────┘ │  └────────▲─┘ └─▲─────────┘
    ┌─────┬────┼───────────┼─────┼─┐
    │ CDC │  ┌─▼──────┐    │     │ │
    ├─────┘  │ Leader ├────┴─────┘ │
    │        └─────▲──┘            │
    │              │               │
    │       ┌──────┴───────┐       │
    │       │              │       │
    │ ┌─────▼────┐    ┌────▼─────┐ │
    │ │ Follower │ .. │ Follower │ │
    │ └──────────┘    └──────────┘ │
    └──────────────────────────────┘

The CDC, much like a shard, is split into five replicas. The clients send requests to the leader, and the leader executes distributed transactions on the shards, while persisting its state using LogsDB.

In this case the client sent a request involving two shards — Shard 32 and Shard 103.

The CDC executes transactions in parallel, which increases throughput considerably, but it is still a bottleneck when it comes to creating, removing, or moving directories. This means that TernFS has a relatively low throughput when it comes to CDC operations.[7]

Block services, or file contents

In TernFS, files are split into chunks of data called blocks. Blocks are read and written by block services. A block service is typically a single drive (be it a hard disk or a flash drive) storing blocks. At XTX a typical storage server will contain around 100 hard disks or 25 flash drives (in TernFS parlance, 100 or 25 block services).[8]

Read/write access to the block service is provided using a simple TCP API currently implemented by a Go process. This process is hardware agnostic and uses the Go standard library to read and write blocks to a conventional local file system. We originally planned to rewrite the Go process in C++, and possibly write to block devices directly, but the idiomatic Go implementation has proven performant enough for our needs so far.

The registry

The final piece of the TernFS puzzle is the registry. The registry stores the location of each service instance (be it a metadata shard, the CDC, or a block storage node). A client only needs to know the address of the registry to mount TernFS; it then gathers the locations of the other services from the registry.

In TernFS all locations are IPv4 addresses. Working with IPv4 directly simplifies the kernel module considerably, since DNS lookups are quite awkward in the Linux kernel. The exception to this rule is addressing the registry itself, for which DNS is used.

The registry also stores additional information, such as the capacity and available size of each drive, who is a follower or a leader in LogsDB clusters, and so on.

Predictably, the registry itself is a RocksDB and LogsDB C++ process, given its statefulness.

Going global

TernFS tries very hard not to lose data, by storing both metadata and file contents on many different drives and servers. However, we also want to be resilient to the temporary or even permanent loss of one entire data centre. Therefore, TernFS can transparently scale across multiple locations.

The intended use for TernFS locations is for each location to converge to the same dataset. This means that each location will have to be provisioned with roughly equal resources.[9] Both metadata and file contents replication are asynchronous. In general, we judge the event of losing an entire data centre rare enough to tolerate a time window where data is not fully replicated across locations.

Metadata replication is set up so that one location is the metadata primary. Write operations in non-primary locations pay a latency price since they are acknowledged only after they are written to the primary location, replicated, and applied in the originating location. In practice this hasn't been an issue since metadata write latencies are generally overshadowed by writing file contents.

There is no automated procedure to migrate off a metadata primary location — again, we deem it a rare enough occurrence to tolerate manual intervention. In the future we plan to move from the current protocol to a multi-master protocol where each location can commit writes independently, which would reduce write latencies on secondary locations and remove the privileged status of the primary location.

File contents, unlike metadata, are written locally to the location the client is writing from. Replication to other locations happens in two ways: proactively and on-demand. Proactive replication is performed by tailing the metadata log and replicating new file contents. On-demand replication happens when a client requests file content which has not been replicated yet.

Important Details

Now that we've laid down the high-level design of TernFS, we can talk about several key implementation details that make TernFS safer, more performant, and more flexible.

Talking to TernFS

Speaking TernFS' language

The most direct way to talk to TernFS is by using its own API. All TernFS messages are defined using a custom serialization format we call bincode. We chose to develop a custom serialization format since we needed it to work within the confines of the Linux kernel and to be easily chopped into UDP packets.

We intentionally kept the TernFS API stateless, in the sense that each request executes without regard to previous requests made by the same client. This is in contrast to protocols like NFS, whereby each connection is very stateful, holding resources such as open files, locks, and so on.

A stateless API dramatically simplifies the state machines that make up the TernFS core services, therefore simplifying their testing. It also forces each request to be idempotent, or in any case have clear retry semantics, since they might have to be replayed, which facilitates testing further.

It also allows the metadata shards and CDC API to be based on UDP rather than TCP, which makes the servers and clients (especially the kernel module) simpler, since they do not need to maintain TCP connections. The block service API is TCP-based, since it is used to stream large amounts of contiguous data, and any UDP implementation would have to re-implement a reliable stream protocol. The registry API is also TCP-based, given that it is rarely used by clients, and occasionally needs to return large amounts of data.

While the TernFS API is simple out-of-the-box, we provide a permissively licensed Go library implementing common tasks that clients might want to perform, such as caching directory policies and retrying requests. This library is used to implement many TernFS processes that are not part of the core TernFS services, such as scrubbing, garbage collection, migrations, and the web UI.

Making TernFS POSIX-shaped

While the Go library is used for most ancillary tasks, some with high performance requirements, the main way to access TernFS at XTX is through its Linux kernel module.

This is because, when migrating our machine learning workflows to TernFS, we needed to support a vast codebase working with files directly. This not only meant that we needed to expose TernFS as a normal filesystem, but also that said normal filesystem API needed to be robust and performant enough for our machine learning needs.[10]

For this reason, we opted to work with Linux directly, rather than using FUSE. Working directly with the Linux kernel not only gave us the confidence that we could achieve our performance requirements but also allowed us to bend the POSIX API to our needs, something that would have been more difficult if we had used FUSE.[11]

The main obstacle when exposing TernFS as a 'normal' filesystem is that TernFS files are immutable. More specifically, TernFS files are fully written before being 'linked' into the filesystem as a directory entry. This is intentional: it lets us cleanly separate the API for 'under construction' files and 'completed files', and it means that half-written files are not visible.

However, this design is essentially incompatible with POSIX, which endows the user with near-absolute freedom when it comes to manipulating a file. Therefore, the TernFS kernel module is not POSIX-compliant; rather, it exposes enough of POSIX to allow many (but not all) programs to work without modification.

In practice this means that programs which write files left-to-right and never modify the files' contents will work out-of-the-box. While this might seem very restrictive, we found that a surprising number of programs worked just fine.[12] Programs that did not follow this pattern were modified to first write to a temporary file and then copy the finished file to TernFS.
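The "write to a temporary file, then copy" workaround can be sketched in a few lines of Python. This is an illustrative sketch, not XTX's tooling: `write_via_temp` is a hypothetical helper, and whether a particular copy routine behaves well on a TernFS mount is an assumption to verify. The point is that arbitrary seeks happen on a local scratch file, and the destination only ever sees one front-to-back write.

```python
import os
import shutil
import tempfile


def write_via_temp(dest_path: str, chunks) -> None:
    """Build a file with arbitrary seeks locally, then copy it in one pass.

    `chunks` is an iterable of (offset, bytes) pairs in any order.
    """
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp_path = tmp.name
        for offset, data in chunks:
            tmp.seek(offset)   # random-access writes are fine on local disk
            tmp.write(data)
    try:
        # The destination is created once and filled from start to end,
        # so no byte that was already written is revisited.
        shutil.copyfile(tmp_path, dest_path)
    finally:
        os.unlink(tmp_path)
```

A caller would pass a destination under the mount point, for example `write_via_temp("/mnt/ternfs/out.bin", chunks)`, where the mount path is hypothetical.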

While we feel that writing our own kernel module was the right approach, it proved to be the trickiest part of TernFS, and we would not have been able to implement it without some important safety checks in the TernFS core services.[13]

S3 gateway

Almost all the storage-related activity at XTX is due to our machine-learning efforts, and for those purposes the TernFS kernel module has served us well. However, as TernFS proved itself there, we started to look into offering TernFS to the broader firm.

Doing so through the kernel module presented multiple challenges. For starters, installing a custom kernel module on every machine that needs to reach TernFS is operationally cumbersome. Moreover, while all machine learning happens in clusters housed in the same data centre as TernFS itself, we wanted to expose TernFS in a way that's more amenable to less local networks, for instance by removing the need for UDP. Finally, TernFS does not have any built-in support for permissions or authentication, which is a requirement in multi-tenant scenarios.

To solve all these problems, we implemented a gateway for TernFS, which exposes a TernFS subtree using the S3 API. The gateway is a simple Go process turning S3 calls into TernFS API calls. The S3 gateway is not currently open sourced since it is coupled to authentication services internal to XTX, but we have open sourced a minimal S3 gateway to serve as a starting point for third-party contributors to build their own.

We've also planned an NFS gateway to TernFS, but we haven't had a pressing enough need yet to complete it.

The web UI and the JSON interface

Finally, a view of TernFS is provided by its web UI. The web UI is a stateless Go program which exposes most of the state of TernFS in an easy-to-use interface. This state includes the full filesystem contents (both metadata and file contents), the status of each service including information about decommissioned block services, and so on.

Moreover, the web UI also exposes the direct TernFS API in JSON form, which is very useful for small scripts and curl-style automation that does not warrant a full-blown Go program.

Directory Policies

To implement some of the functionality we'll describe below, TernFS adopts a system of per-directory policies.

Policies are used for all sorts of decisions, including:

- How to redundantly store files.

- On which type of drive to store files.

- How long to keep files around after deletion.

Each of the topics above (and a few more we haven't mentioned) corresponds to a certain policy tag. The bodies of the policies are stored in the metadata together with the other directory attributes.

Policies are inherited: if a directory does not contain a certain policy tag, it transitively inherits from the parent directory. TernFS clients store a cache of policies to allow for traversal-free policy lookup for most directories.

Keeping blocks in check

A filesystem is no good if it loses, leaks, corrupts, or otherwise messes up its data. TernFS deploys a host of measures to minimize the chance of anything going wrong. So far, these have worked: we've never lost data in our production deployment of TernFS. This section focuses on the measures in place to specifically safeguard files' blocks.

Against bitrot, or CRC32-C

The first and possibly most obvious measure consists of aggressively checksumming all TernFS' data. The metadata is automatically checksummed by RocksDB, and every block is stored in a format interleaving 4KiB pages with 4-byte CRC32-C checksums.

CRC32-C was picked since it is a high-quality checksum and implemented on most modern silicon.[14] It also exhibits some desirable properties when used together with Reed-Solomon coding.

Peter Cawley's fast-crc32 repository provides a general framework to compute CRC32-C quickly, together with state-of-the-art implementations for x86 and aarch64 architectures.

4KiB was picked since it is the read boundary used by Linux filesystems and is fine-grained while still being large enough to render the storage overhead of the 4-byte checksums negligible.

Interleaving the CRCs with the block contents does not add any safety, but it does improve operations in two important ways. First, it allows for safe partial reads: clients can demand only a few pages from a block which is many megabytes in size and still check the reads against its checksum. Second, it allows scrubbing files locally on the server which hosts the blocks, without communicating with other services at all.
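To make the interleaving concrete, here is a small Python sketch. The exact on-disk layout is an assumption: the sketch places each checksum after its page, stores it little-endian, and zero-pads the last page. The CRC32-C routine is a plain table-driven implementation, far slower than the hardware-accelerated versions mentioned above.

```python
import struct

PAGE_SIZE = 4096  # 4KiB pages, per the text
CRC_SIZE = 4      # one 4-byte CRC32-C per page


def _make_table():
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            # 0x82F63B78 is the reflected Castagnoli polynomial.
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _make_table()


def crc32c(data: bytes) -> int:
    """Table-driven CRC32-C over `data`."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def encode_block(payload: bytes) -> bytes:
    """Interleave each zero-padded page with its checksum (assumed layout)."""
    out = bytearray()
    for start in range(0, len(payload), PAGE_SIZE):
        page = payload[start:start + PAGE_SIZE].ljust(PAGE_SIZE, b"\0")
        out += page + struct.pack("<I", crc32c(page))
    return bytes(out)


def read_page(stored: bytes, index: int) -> bytes:
    """Read a single page from a stored block and verify it in isolation."""
    stride = PAGE_SIZE + CRC_SIZE
    chunk = stored[index * stride:(index + 1) * stride]
    page = chunk[:PAGE_SIZE]
    (expected,) = struct.unpack("<I", chunk[PAGE_SIZE:])
    if crc32c(page) != expected:
        raise ValueError(f"checksum mismatch in page {index}")
    return page
```

Because every page carries its own checksum, `read_page` never needs the rest of the block, which is what makes partial reads and local scrubbing possible. The space cost is 4 bytes per 4096, or roughly 0.1%.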

Storing files redundantly, or Reed-Solomon codes

We've been talking about files being split into blocks, but we haven't really explained how files become blocks.

The first thing we do to a file is split it into spans. Spans are at most 100MiB and are present just to divide files into sections of a manageable size.

Then each span is divided into D data blocks, and P parity blocks. D and P are determined by the corresponding directory policy in which the file is created. When D is 1, the entire contents of the span become a single block, and that block is stored D+P times. This scheme is equivalent to simple mirroring and allows us to lose up to P blocks before losing file data.

While wasteful, mirroring the entire contents of the file can be useful for very hot files, since TernFS clients will pick a block at random to read from, thereby sharing the read load across many block services. And naturally files which we do not care much for can be stored with D = 1 and P = 0, without any redundancy.

That said, most files will not be stored using mirroring but rather using Reed-Solomon coding. Other resources can be consulted to understand the high-level idea and the low-level details of Reed-Solomon coding, but the gist is that it allows us to split a span into D equally sized blocks (some padding might be necessary), and then generate P blocks of equal size such that any P of the D+P blocks can be lost while retaining the ability to reconstruct all the others.

As mentioned, D and P are fully configurable, but at XTX we tend to use D = 10 and P = 4, which means that any file survives the loss of any four drives.
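The arithmetic behind spans and blocks is easy to check with a short Python sketch. It does not implement Reed-Solomon coding itself; it only computes how a file is cut into spans, how large each block is for a given D and P, and how many bytes end up stored. The function names are hypothetical, and the sketch ignores checksum overhead.

```python
SPAN_SIZE = 100 * 1024 * 1024  # spans are at most 100MiB, per the text


def split_into_spans(file_size: int, span_size: int = SPAN_SIZE):
    """Return (offset, length) pairs covering a file of `file_size` bytes."""
    return [(off, min(span_size, file_size - off))
            for off in range(0, file_size, span_size)]


def block_layout(span_len: int, d: int, p: int):
    """Return (block_size, stored_bytes) for one span under a D+P policy."""
    block_size = -(-span_len // d)  # ceiling division; the tail is padded
    return block_size, block_size * (d + p)


def storage_overhead(d: int, p: int) -> float:
    """Bytes stored per byte of file data, ignoring padding."""
    return (d + p) / d
```

For the D = 10, P = 4 setting, `storage_overhead(10, 4)` is 1.4, while mirroring with the same tolerance of four lost blocks (D = 1, P = 4) costs 5.0. That gap is why mirroring is reserved for very hot files.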

Drive type picking

We now know how to split files into a bunch of blocks. The next question is which drives to store the blocks on. The first decision is which kind of drive to use. At XTX we separate drives into two broad categories for this purpose: flash and spinning disks.

When picking between these two, we want to balance two needs: minimizing the cost of hardware by utilizing hard disks if we can [15], and maximizing hard disk productivity by having them reading data most of the time, rather than seeking.

To achieve that, directory policies offer a way to tune how large each block will be, and to tune which drives will be picked based on block size. This allows us to configure TernFS so that larger files that can be read sequentially are stored on hard disks, while random-access or small files are stored on flash. [16]

Currently this system is not adaptive, but we found that in practice it's easy to carve out sections of the filesystem which are not read sequentially. We have a default configuration which assumes sequential reads and then uses hard disks down to roughly 2.5MB blocks, below which hard disks stop being productive enough and blocks start needing to be written to flash.
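The default rule described above amounts to a threshold on block size. The following Python sketch shows that decision in isolation; the function name, the `sequential_reads` flag, and the exact threshold value are illustrative assumptions, since in TernFS this is expressed through directory policies rather than code.

```python
HDD_MIN_BLOCK_BYTES = 2_500_000  # "roughly 2.5MB", per the text


def pick_storage_class(block_size: int, sequential_reads: bool = True,
                       threshold: int = HDD_MIN_BLOCK_BYTES) -> str:
    """Choose "hdd" for large sequentially read blocks, "flash" otherwise."""
    if sequential_reads and block_size >= threshold:
        return "hdd"
    return "flash"
```

For example, a 100MiB span with D = 10 yields blocks of about 10MiB and lands on hard disks, while a 2MB file split ten ways yields 200kB blocks and lands on flash.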

Block service picking

OK, we now know what type of drive to select for our files, but we still have tens of thousands of individual drives to pick from. Picking the 'right' individual drive requires some sophistication.

The first thing to note is that drive failures or unavailability are often correlated. For instance, at XTX a single server handles 102 spinning disks. If the server is down, faulty, or needs to be decommissioned, it'll render its 102 disks temporarily or permanently unavailable.

It's therefore wise to spread a file's blocks across many servers. To achieve this, each TernFS block service (which generally corresponds to a single drive) has a failure domain. When picking block services in which to store the blocks for a given file, TernFS will make sure that each block is in a separate failure domain. In our TernFS deployment a failure domain corresponds to a server, but other users might wish to tie it to some other factor as appropriate.

TernFS also tries hard to avoid write bottlenecks by spreading the current write load across many disks. Moreover, since new drives can be added at any time, it tries to converge to a situation where each drive is roughly equally filled by assigning more writes to drives with more available space.

Mechanically this is achieved by having each shard periodically request a set of block services to use for writing from the registry. When handing out block services to shards, the registry selects block services according to several constraints:

- It never gives block services from the same failure domain to the same shard.

- It minimizes the variance in how many shards each block service is currently assigned to.

- It prioritizes block services which have more available space.

Then when a client wants to write a new span, requiring D+P blocks, the shard simply selects D+P block services randomly amongst the ones it last received from the registry.
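The two-stage selection can be sketched in Python as follows. This is a simplification under stated assumptions: the names are hypothetical, and the registry side here enforces only the failure-domain and free-space constraints, leaving out the variance-minimising constraint across shards.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockService:
    id: int
    failure_domain: str   # a server, in the deployment described above
    available_bytes: int


def hand_out_to_shard(services, count):
    """Registry side: at most one block service per failure domain,
    preferring the ones with the most available space."""
    best_per_domain = {}
    for svc in services:
        current = best_per_domain.get(svc.failure_domain)
        if current is None or svc.available_bytes > current.available_bytes:
            best_per_domain[svc.failure_domain] = svc
    ranked = sorted(best_per_domain.values(),
                    key=lambda s: s.available_bytes, reverse=True)
    return ranked[:count]


def pick_for_span(handed_out, d, p, rng=random):
    """Shard side: choose D+P block services at random from the last handout."""
    if len(handed_out) < d + p:
        raise ValueError("not enough failure domains for this policy")
    return rng.sample(handed_out, d + p)
```

Because the handout already contains at most one block service per failure domain, the shard's random choice needs no further checks to keep each block of a span on a different server.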

One concept currently absent from TernFS is what is often known as 'copyset replication'. When assigning disks to files at random (even with the caveat of failure domains) the probability of rendering at least one file unreadable quickly becomes a certainty as more and more drives fail:

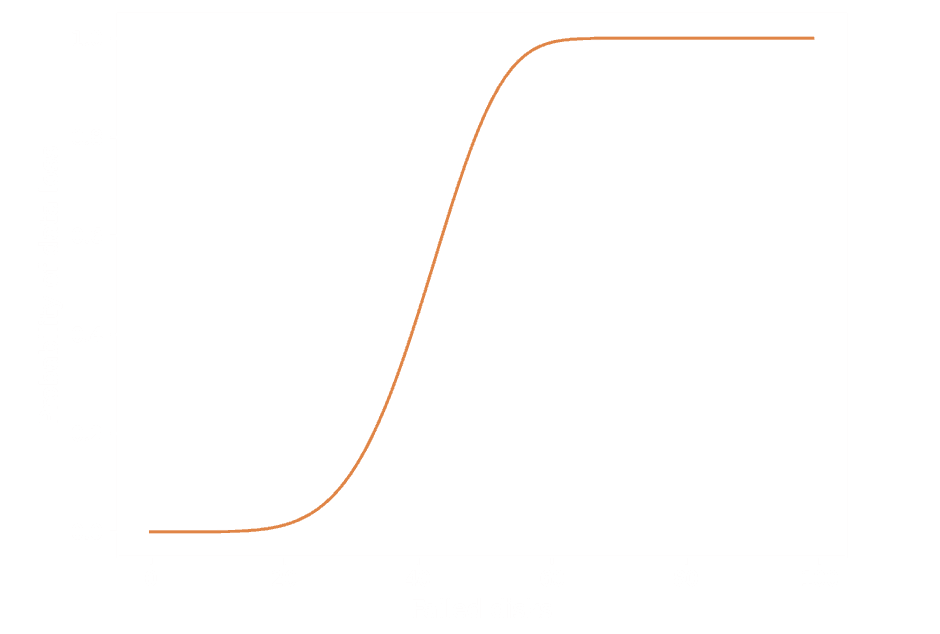

Plot showing probability of losing at least one file on a TernFS deployment with 30,000 drives and 100 billion files stored with 10+4 Reed-Solomon, which gives a rough model of our current TernFS deployment.

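The probability is $1 - (1 - p)^{F}$, where $F = 10^{11}$ is the number of files, $p = \Pr(X > 4)$, and $X \sim \mathrm{Hypergeometric}(n, K, N)$ is the hypergeometric distribution with $n = 14$ draws (the blocks of one file), $K$ successes (the failed drives), and population size $N = 30{,}000$. $X$ is a random variable representing the number of blocks which are rendered unreadable for a specific file.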
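The shape of the plot can be reproduced with a short Python calculation. The model below is an assumption made for illustration: each file is treated as a single span whose 14 blocks sit on 14 distinct drives chosen uniformly at random, files are treated as independent, and a file is lost once more than four of its blocks are on failed drives. The drive and file counts come from the caption above.

```python
from math import comb, expm1, log1p

DRIVES = 30_000            # population size
FILES = 100_000_000_000    # 100 billion files
D, P = 10, 4               # 10+4 Reed-Solomon


def p_file_lost(failed: int) -> float:
    """Probability that more than P of one file's D+P blocks are on failed drives."""
    n = D + P
    total = comb(DRIVES, n)
    hits = sum(comb(failed, j) * comb(DRIVES - failed, n - j)
               for j in range(P + 1, n + 1))
    return hits / total


def p_any_file_lost(failed: int) -> float:
    """1 - (1 - p)^FILES, computed stably for very small p."""
    p = p_file_lost(failed)
    if p >= 1.0:
        return 1.0
    return -expm1(FILES * log1p(-p))
```

Under these assumptions the result is exactly zero for four or fewer failed drives, stays small for a handful of failures, and approaches one well before a hundred simultaneous failures.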

Copysets reduce the likelihood of data loss occurring by choosing blocks out of a limited number of sets of drives, as opposed to picking the drives randomly. This dramatically reduces the probability of data loss[17]. They are generally a good idea, but we haven't found them to be worthwhile, for a few reasons.

Note that while copysets reduce the failure probability, they do not (and cannot) reduce the expected amount of data loss. That is, instead of a large probability of a relatively small amount of data loss we have a very small probability of a catastrophic loss.

First, evacuating a 20TB drive takes just a few minutes, and in the presence of multiple failed drives the migrator process evacuates first the files which are present in multiple failed drives to get ahead of possible data loss. This means that for TernFS to lose data within a single data centre tens of drives would have to fail within a matter of seconds.

More importantly, our TernFS deployment is replicated across three data centres. This replication eliminates the chance of losing data due to 'independent' drive failures — thousands of drives would need to fail at once. Obviously, data centre wide events can cause a large proportion of the drives within it to fail, but having such an event in three data centres at once is exceedingly unlikely.

Finally, copysets are not without drawbacks or complications. Assigning drives at random is an optimal strategy when it comes to evacuating drives quickly, since the files with blocks in the drives to be evacuated will be evenly spread over the rest of the filesystem, and since we only ever need to replace the failed blocks given that we're not constrained by fitting the new set of blocks in predetermined copysets. This means that the evacuation procedure will not be bottlenecked by drive throughput, which is what enables evacuation to finish in a matter of minutes. Moreover, the algorithm to distribute drives to shards is significantly simpler and more flexible than if it needed to care about copysets.

However, users that wish to deploy TernFS within a single data centre might wish to implement some form of copyset replication. Such a change would be entirely contained to the registry and would not change any other component.

Block Proofs

We now have a solid scheme to store files redundantly (thanks to Reed-Solomon codes) and protect against bitrot (thanks to the checksums). However, said schemes are only as good as their implementation.

As previously mentioned, TernFS clients communicate their intention to write a file to metadata servers, the metadata servers select block services that the blocks should be written to, and the clients then write the blocks to block services independently of the metadata services. The same happens when a client wants to erase blocks: the client first communicates its intentions to delete the blocks to the right metadata shard and then performs the erasing itself.

This poses a challenge. While verifying the correctness of the core TernFS services is feasible, verifying all clients is not, but we'd still like to prevent buggy clients from breaking key invariants of the filesystem.

Buggy clients can wreak havoc in several ways:

- They can leak data by writing blocks to block services that are not referenced anywhere in the metadata.

- They can lose data by erasing blocks which are still referenced in metadata.

- They can corrupt data by telling the metadata services they'll write something and then writing something else.

We address all these points by using what we call block proofs. To illustrate how block proofs work, it's helpful to go through the steps required to write new data to a file.

- When a client is creating a file, it'll do so by adding its file spans one-by-one. For each span the client wants to add it sends an 'initiate span creation' request to the right metadata shard. This request contains both the overall checksum of the span, and the checksum of each block in it (including parity blocks).

- The metadata shard checks the consistency of the checksum of the span and of its blocks, something it can do thanks to some desirable mathematical properties of CRCs.

- The shard picks block services for the blocks to be written in and returns this information to the client together with a signature for each 'block write' instruction.

- The client forwards this signature to the block services, which will refuse to write the block without it. Crucially, the cryptographic signature ranges over a unique identity for the block (ensuring we only write the block we mean to write), together with its checksum, ensuring we don't write the wrong data.[18]

- After committing the block to disk, the block service returns a 'block written' signature to the client.

- Finally, the client forwards the block written signature back to the shard, which certifies that the span has been written only when it has received the signatures for all the blocks that make up the span. [19]

This kind of scheme was described more generally in a separate blog post.
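The write path above can be sketched in a few lines of Go. This is a minimal illustration, not the TernFS wire format: the message layout, the `kindWrite`/`kindWritten` tags, the 8-byte truncation, and the hard-coded key are all assumptions made for the example. The only thing taken from the text is that the proof covers the block's identity and its checksum, and that AES is used to produce it.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/subtle"
	"encoding/binary"
	"fmt"
)

// Message kinds; the values are hypothetical.
const (
	kindWrite   byte = 'w' // shard -> block service: "you may write this block"
	kindWritten byte = 'W' // block service -> shard: "this block is on disk"
)

// proof encrypts one 16-byte AES block holding (block ID, CRC, kind) and
// keeps the first 8 bytes as the tag. The layout is an assumption.
func proof(c cipher.Block, kind byte, blockID uint64, crc uint32) [8]byte {
	var msg, out [16]byte
	binary.LittleEndian.PutUint64(msg[0:8], blockID)
	binary.LittleEndian.PutUint32(msg[8:12], crc)
	msg[12] = kind
	c.Encrypt(out[:], msg[:])
	var tag [8]byte
	copy(tag[:], out[:8])
	return tag
}

// verify reports whether tag is the proof expected for the given fields.
func verify(c cipher.Block, tag [8]byte, kind byte, blockID uint64, crc uint32) bool {
	want := proof(c, kind, blockID, crc)
	return subtle.ConstantTimeCompare(tag[:], want[:]) == 1
}

// newCipher returns the cipher shared by a shard and a block service.
// The key is a placeholder for the example.
func newCipher() cipher.Block {
	c, err := aes.NewCipher([]byte("0123456789abcdef"))
	if err != nil {
		panic(err)
	}
	return c
}

func main() {
	c := newCipher()
	const blockID, crc = 42, 0xdeadbeef

	// Shard: authorizes writing block 42 with this exact checksum.
	writeTag := proof(c, kindWrite, blockID, crc)

	// Block service: accepts the intended write, refuses anything else.
	fmt.Println(verify(c, writeTag, kindWrite, blockID, crc))   // true
	fmt.Println(verify(c, writeTag, kindWrite, blockID, crc+1)) // false: wrong data
	fmt.Println(verify(c, writeTag, kindWrite, blockID+1, crc)) // false: wrong block

	// Block service: after committing to disk, certifies the write.
	writtenTag := proof(c, kindWritten, blockID, crc)

	// Shard: only a 'written' proof counts, not the replayed 'write' proof.
	fmt.Println(verify(c, writtenTag, kindWritten, blockID, crc)) // true
	fmt.Println(verify(c, writeTag, kindWritten, blockID, crc))   // false
}
```

Putting the message kind inside the tagged message is what keeps a client from handing the shard its own 'block write' proof in place of a 'block written' one.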

Similarly, when a client wants to delete a span, it first asks the metadata shard to start doing so. The metadata shard marks the span as 'in deletion' and returns a bunch of 'block erase' signatures to the client. The client then forwards the signatures to the block services that hold the blocks, which delete the blocks, and return a 'block erased' signature. The clients forward these signatures back to the metadata shards, which can then forget about the span entirely.

We use AES to generate the signatures for simplicity, but note that the goal here is not protecting ourselves from malicious clients, just buggy ones. The keys used for the signature are not kept secret, and CRC32-C is not a secure checksum. That said, we've found this scheme enormously valuable in the presence of complex clients. We spent considerable effort making the core services very simple so we could then take more implementation risks in the clients, with the knowledge that we would have a very low chance of corrupting the filesystem itself.
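The linearity that makes CRC32-C unsuitable as a secure checksum is also the kind of property that lets a shard cross-check block checksums without seeing any data. As a hedged illustration, the sketch below covers only the simplest case, a byte-wise XOR parity block over equally sized data blocks; the helper names are hypothetical, and the exact checks TernFS performs for its real parity scheme are not described here. For a fixed length, a CRC is an affine function of the message, so the CRC of the XOR of n blocks equals the XOR of their CRCs, with the CRC of an all-zero block mixed in when n is even.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

var castagnoli = crc32.MakeTable(crc32.Castagnoli)

func crc32c(b []byte) uint32 { return crc32.Checksum(b, castagnoli) }

// xorParity returns the byte-wise XOR of equally sized blocks.
func xorParity(blocks ...[]byte) []byte {
	p := make([]byte, len(blocks[0]))
	for _, b := range blocks {
		for i := range b {
			p[i] ^= b[i]
		}
	}
	return p
}

// parityCRC predicts the CRC32-C of the XOR parity block from the data
// block CRCs alone. blockLen is the common block length in bytes.
func parityCRC(blockLen int, crcs ...uint32) uint32 {
	var out uint32
	for _, c := range crcs {
		out ^= c
	}
	// Each CRC carries a length-dependent constant; with an even number
	// of inputs the constants cancel out, so it has to be added back.
	if len(crcs)%2 == 0 {
		out ^= crc32c(make([]byte, blockLen))
	}
	return out
}

func main() {
	a := []byte("raw tick data 01")
	b := []byte("raw tick data 02")
	d := []byte("raw tick data 03")

	// The checker only needs the claimed CRCs, never the block contents.
	predicted := parityCRC(len(a), crc32c(a), crc32c(b), crc32c(d))
	actual := crc32c(xorParity(a, b, d))
	fmt.Println(predicted == actual) // true
}
```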

Scrubbing

Finally, if things go wrong, we need to notice. The most common failure mode for a drive is for it to fail entirely, in which case our internal hardware monitoring system will pick it up and migrate from it automatically. The more insidious (and still very common) case is a single sector failing in a drive, which will only be noticed when we try to read the block involving that sector.

This is acceptable for files which are read frequently, but some files might be very 'cold' but still very important.

Consider the case of raw market data taps which are immediately converted to some processed, lossy format. While we generally will use the file containing the processed data, it's paramount to store the raw market data forever so that if we ever want to include more information from the original market data, we can. So important cold files might go months or even years without anyone reading them, and in the meantime, we might find that enough blocks have been corrupted to render them unreadable.[20]

To make sure this does not happen, a process called the scrubber continuously reads every block that TernFS stores, and replaces blocks with bad sectors before they can cause too much damage.
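The core of a scrubbing pass is small: read each block, recompute its checksum, and compare it with the one recorded at write time. The sketch below shows only that comparison over in-memory data. `StoredBlock` and `scrub` are hypothetical names, and the real scrubber additionally has to read from block services, pace itself, and trigger repair of the blocks it flags.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

var castagnoli = crc32.MakeTable(crc32.Castagnoli)

// StoredBlock pairs the bytes read back from a drive with the checksum
// recorded in metadata when the block was written. Hypothetical type.
type StoredBlock struct {
	ID   uint64
	Data []byte
	CRC  uint32
}

// scrub reads every block once and returns the IDs of the blocks whose
// contents no longer match their recorded CRC32-C.
func scrub(blocks []StoredBlock) []uint64 {
	var bad []uint64
	for _, b := range blocks {
		if crc32.Checksum(b.Data, castagnoli) != b.CRC {
			bad = append(bad, b.ID)
		}
	}
	return bad
}

// newBlock records the checksum of the data as it was originally written.
func newBlock(id uint64, data string) StoredBlock {
	d := []byte(data)
	return StoredBlock{ID: id, Data: d, CRC: crc32.Checksum(d, castagnoli)}
}

func main() {
	blocks := []StoredBlock{
		newBlock(1, "raw market data"),
		newBlock(2, "processed data"),
	}
	blocks[1].Data[0] ^= 0x01 // simulate a single flipped bit on disk

	fmt.Println(scrub(blocks)) // [2]
}
```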

Snapshots and garbage collection

We've talked at length about what TernFS does to try to prevent data loss due to hardware failure or bugs in clients. However, the most common type of data loss is due to human error: the rm -rf / home/alice/notes.txt scenario.

To protect against these scenarios, TernFS implements a lightweight snapshotting system. When files or directories are deleted, their contents aren't actually deleted. Instead, a weak reference to them is created. We call such weak references snapshot directory entries.

Snapshot entries are not visible through the kernel module or the S3 gateway, but are visible through the direct API, and at XTX we have developed internal tooling to easily recover deleted files through it.[21] Deleted files are also visible through the TernFS web UI.

Given that 'normal' file operations do not delete files, but rather make them a snapshot, the task of freeing up space is delegated to an external Go process, the garbage collector. The garbage collector traverses the filesystem and removes expired snapshots, which involves deleting their blocks permanently. Snapshot expiry is predictably regulated by directory policies.

Keeping TernFS healthy

This last section covers how we (humans of XTX) notice problems in TernFS, and how TernFS self-heals when things go wrong — both key topics if we want to ensure no data loss and notice performance problems early.

Performance metrics

TernFS exposes a plethora of performance metrics through the HTTP InfluxDB line protocol. While connecting TernFS to a service which ingests these metrics is optional, it is highly recommended for any production service.

Moreover, the kernel module exposes many performance metrics itself through DebugFS.

Both types of metrics, especially when used in tandem, have proved invaluable to resolve performance problems quickly.

Logging and alerts

TernFS services log their output to files in a simple line-based format. The internal logging API is extremely simple and includes support for syslog levels out-of-the-box. At XTX we run TernFS as normal systemd services and use journalctl to view logs.

As with metrics, the kernel module includes various logging facilities as well. The first type of logging is just through dmesg, but the kernel module also includes numerous tracepoints for low-overhead opt-in logging of many operations.

TernFS is also integrated with XTX's internal alerting system, called XMon, to page on-call developers when things go wrong. XMon is not open source, but all the alerts are also rendered as error lines in logs. [22] We plan to eventually move to having alerts feed off performance metrics, which would make them independent from XMon, although we don't have plans to do so in the short term.

Migrations

Finally, there's the question of what to do when drives die — and they will die, frequently, when you have 50,000 of them. While drives dying is not surprising, we've been surprised at the variety of different drive failures. [23] A malfunctioning drive might:

- Produce IO errors when reading specific files. This is probably due to a single bad sector.

- Produce IO errors when reading or writing anything. This might happen because enough sectors have gone bad that the drive cannot remap them, or for a variety of other reasons.

- Return wrong data. This is usually caught by the built-in error correction codes in the hard drives, but not always.

- Lie about data being successfully persisted. This can manifest in a variety of ways: file size being wrong on open, file contents being partially zero'd out, and so on.

- Disappear from the mount list, only to reappear when the machine is rebooted, but missing some data.

When clients fail to read from a drive, they'll automatically fall back on other drives to reconstruct the missing data, which is extremely effective in hiding failures from the end-user. That said, something needs to be done about the bad drives, and done quickly to avoid permanent data loss.

The TernFS registry allows marking drives as faulty. Faulty drives are then picked up by the migrator, a Go process which waits for bad drives and then stores all their blocks onto freshly picked block services.

TernFS also tries to mark drives as bad automatically using a simple heuristic based on the rate of IO errors the drive is experiencing. The number of drives automatically marked as faulty is throttled to avoid having this check go awry and mark the whole cluster as faulty, which would not be catastrophic but would still be messy to deal with.

Moreover, drives that are faulty in subtle ways might not be picked up by the heuristics, which means that occasionally a sysadmin will need to mark a drive as faulty manually, after which the migrator will evacuate it.
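The automatic marking described above combines two ideas: an error-rate threshold and a cap on how many drives one pass may condemn. The sketch below shows one way to express that. The `DriveStats` type, the threshold, and the per-pass budget are assumptions made for the example and do not reflect the actual heuristic or its parameters.

```go
package main

import "fmt"

// DriveStats is a hypothetical per-drive counter snapshot for one window.
type DriveStats struct {
	ID       string
	IOs      uint64
	IOErrors uint64
}

// pickFaulty returns the drives whose IO error rate exceeds maxErrorRate,
// but never more than budget drives per call, so that a misbehaving
// check cannot mark the whole cluster as faulty in one go.
func pickFaulty(stats []DriveStats, maxErrorRate float64, budget int) []string {
	var faulty []string
	for _, s := range stats {
		if len(faulty) >= budget {
			break
		}
		if s.IOs == 0 {
			continue // no traffic, nothing to judge
		}
		if float64(s.IOErrors)/float64(s.IOs) > maxErrorRate {
			faulty = append(faulty, s.ID)
		}
	}
	return faulty
}

func main() {
	stats := []DriveStats{
		{ID: "d1", IOs: 1000, IOErrors: 0},
		{ID: "d2", IOs: 1000, IOErrors: 50},
		{ID: "d3", IOs: 1000, IOErrors: 200},
		{ID: "d4", IOs: 1000, IOErrors: 900},
	}
	// d4 is also over the threshold, but the budget of 2 defers it to a
	// later pass (or to a human).
	fmt.Println(pickFaulty(stats, 0.01, 2)) // [d2 d3]
}
```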

Closing thoughts

At XTX we feel strongly about utilizing our resources efficiently. When it comes to software, this means having software that gets close to some theoretical optimum when it comes to total cost of ownership. This culture was born first out of competing hard for technological excellence in on-exchange trading, and later out of our ever-growing hardware costs as the business grew.

Such idealized tools might not exist or be available yet, in which case we're happy to be the tool makers. TernFS is a perfect example of this and we're excited to open source this component of our business for the community.

Crucially, the cost of implementation of a new solution is often overblown compared to the cost of tying yourself to an ill-fitting, expensive third-party solution. Designing and implementing a solution serving exactly your needs allows for much greater simplicity. If the requirements do change, as often happens, changes can be implemented very quickly, again only catering to your needs.

That said, we believe that TernFS' set of trade-offs is widely shared across many organizations dealing with large-scale storage workloads, and we hope we'll contribute to at least slowing down the seemingly constant stream of new filesystems.